King Æthelstan Project

A downloadable game

The King Æthelstan Project is a mixed reality experience in which the user can interact with a life-sized statue of King Æthelstan. By bowing in front of him, users can ask him any question about his life or views. They can also admire the intricate details of the statue and its animated movements. A detailed soundscape ties everything together with a medieval feel.

As the lead programmer, I coordinated the coding side of the project. I was also the main point of contact for the modelling and sound teams, ensuring their work was effectively integrated into the final product.

During Kingston’s Athel’s Town festivities at the end of July, more than 100 visitors enjoyed the immersive experience. They reacted enthusiastically to the beautifully detailed digital statue of King Æthelstan and his dog, which wags its tail. Thanks to the team's effort and OpenAI technology, visitors could ask the king any question after approaching him with a respectful bow.

I worked on this project during a two-month summer internship affiliated with Kingston University.

Further info about the project: https://kingathelstan.info/

Implementations

In addition to integrating the work of all the other team members into the project, I worked on many parts of the code and learned several new tools. These included the MetaSDK, FMOD integration in VR, and Azure services for text-to-speech, lip-syncing and OpenAI ChatGPT responses.

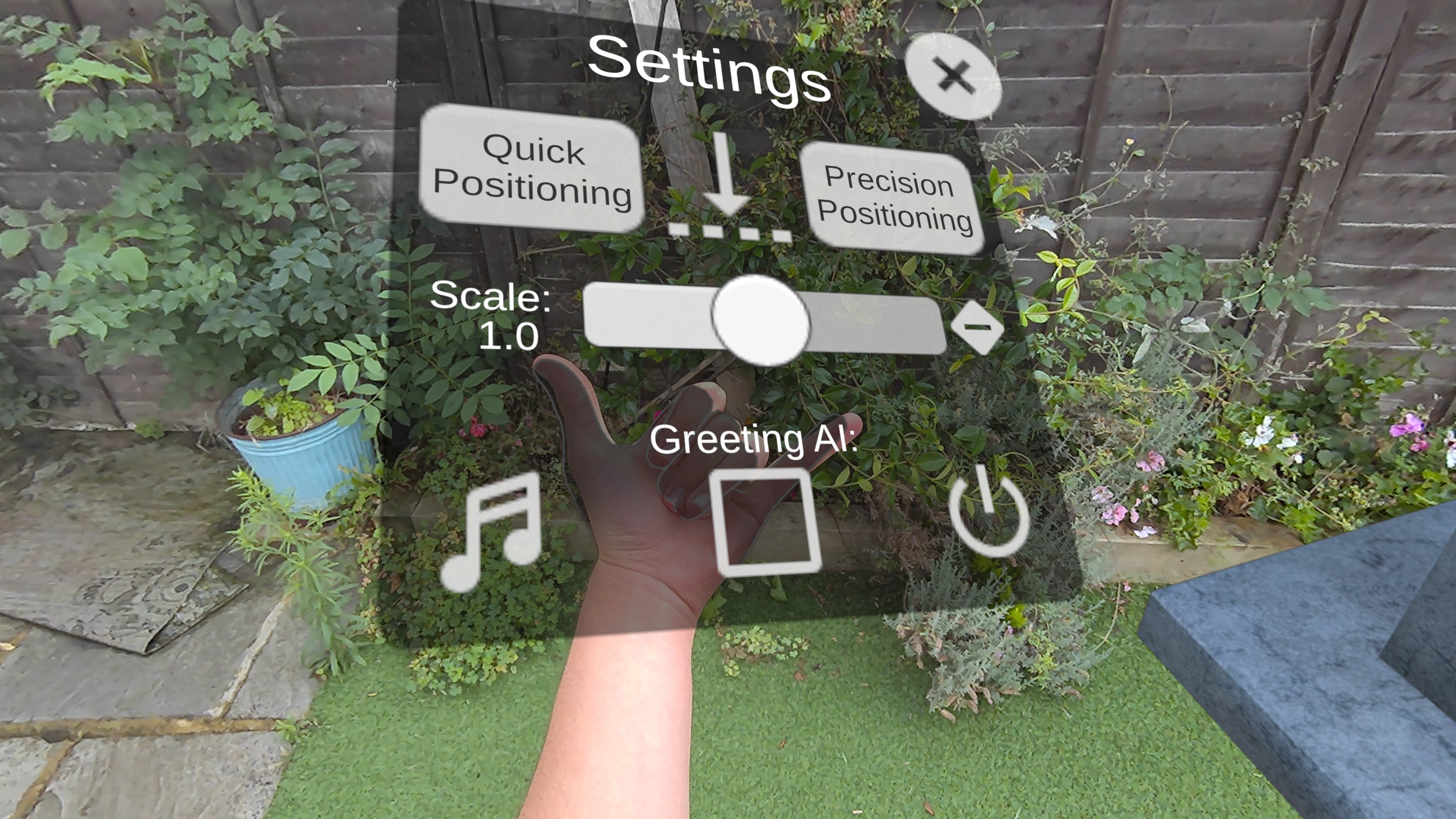

One of the first features I implemented was the user interface for positioning and scaling the statue. It uses a pointable canvas, so the user can press buttons with their index finger.

Visitors using the experience should not be able to open this menu, so I made a specific hand gesture act as a passcode that reveals it. To detect the gesture, I used the built-in hand tracking of the Meta Quest 3.
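The passcode check can be sketched as a small state machine. This is a minimal illustration in Python rather than the project's Unity code. It assumes the hand-tracking layer already reports a per-frame gesture label. The labels, the `GesturePasscode` class and the two-second timeout are all hypothetical. A passcode made of a single gesture is simply a sequence of length one.

```python
class GesturePasscode:
    """Unlocks when the expected gestures are seen in order.

    Gesture labels are hypothetical strings assumed to come from a
    hand-tracking pose detector.
    """

    def __init__(self, sequence, timeout_s=2.0):
        self.sequence = list(sequence)
        self.timeout_s = timeout_s  # max gap (seconds) allowed between steps
        self.index = 0              # how many steps have been matched so far
        self.last_step_time = None

    def update(self, gesture, now):
        """Feed this frame's gesture (or None); return True when the code is complete."""
        # Start over if the user paused too long between two steps.
        if self.index > 0 and now - self.last_step_time > self.timeout_s:
            self.index = 0
        if gesture is None:
            return False
        if gesture == self.sequence[self.index]:
            self.index += 1
            self.last_step_time = now
            if self.index == len(self.sequence):
                self.index = 0
                return True  # show the positioning menu
        elif self.index > 0 and gesture != self.sequence[self.index - 1]:
            # A wrong gesture breaks the code; still holding the previous one does not.
            self.index = 0
        return False
```

Because hand tracking reports the same gesture on many consecutive frames, the sketch tolerates the previous gesture being held. Any other gesture resets the progress, so an accidental pose during the experience is unlikely to open the menu.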

The most impressive part of the project was the interaction sequence in which the user bows in front of the king, receives a response and then gets the chance to ask him any question.

To detect a bow, I used MetaSDK body tracking and recorded several reference poses for the program to compare against. Whenever the user performs these poses, the program starts the conversation with the king.
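The sketch below shows one way to compare the live body pose against the recorded ones. It is written in Python rather than the project's Unity code and rests on several assumptions. Each pose is a dictionary of joint positions in metres, relative to the hips. A bow means matching the recorded poses in order: upright, bowed, then upright again. The joint names, the tolerance and the `BowDetector` class are hypothetical and are not part of the MetaSDK API.

```python
import math

# Hypothetical joint names; a real body-tracking skeleton exposes many more.
JOINTS = ("head", "neck", "chest")


def pose_distance(a, b):
    """Mean Euclidean distance between matching joints of two poses."""
    return sum(math.dist(a[j], b[j]) for j in JOINTS) / len(JOINTS)


class BowDetector:
    """Fires once the live pose has matched each recorded pose in order."""

    def __init__(self, recorded_poses, tolerance=0.1):
        self.recorded = recorded_poses
        self.tolerance = tolerance  # metres (assumed value)
        self.step = 0               # index of the next recorded pose to match

    def update(self, current_pose):
        """Call once per tracking frame; returns True when the bow is complete."""
        if pose_distance(current_pose, self.recorded[self.step]) <= self.tolerance:
            self.step += 1
            if self.step == len(self.recorded):
                self.step = 0
                return True  # bow detected: start the conversation
        return False
```

Measuring joints relative to the hips means the visitor's position in the room does not affect the comparison. Only the shape of the posture matters.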

Once the conversation starts, the program generates an initial greeting for the king to say. It does this by sending an initial context to the Azure OpenAI services, describing who the AI is supposed to be, where it is and what the statue looks like.
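The initial context can be expressed as a list of role/content messages, which is the format that chat-completion requests to Azure OpenAI use. The sketch below only assembles that list and makes no network call. The function name and the prompt wording are hypothetical and are not the project's actual prompt.

```python
def build_greeting_messages(persona, location, appearance):
    """Assemble the opening chat-completion messages as role/content dicts."""
    system_prompt = (
        f"You are {persona}. You are standing in {location}. "
        f"Your statue looks like this: {appearance}. "
        "Stay in character and answer briefly, as if speaking aloud."
    )
    return [
        {"role": "system", "content": system_prompt},
        # The visitor has not spoken yet, so a user turn asks for the greeting.
        {"role": "user", "content": "A visitor has just bowed before you. Greet them."},
    ]


messages = build_greeting_messages("King Æthelstan", "Kingston", "a life-sized statue")
```

Putting the persona, setting and appearance in the system message keeps them in force for the whole conversation, not just the greeting.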

The user can then ask their question, which the program transcribes using the Dictation feature of the MetaSDK. This text is sent to the AI, which generates a new response.
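Each question then becomes one more turn in the conversation history. In this hypothetical sketch, `generate` stands in for whatever wraps the chat-completion request, and empty dictation results are ignored. Only the system prompt and the most recent messages are sent. This is an assumed way to keep requests short, not something the project is known to do.

```python
def ask_king(history, transcript, generate, max_messages=11):
    """Add one visitor question and the king's reply to `history`.

    `generate` is a hypothetical callable that takes a message list and
    returns the reply text (e.g. a wrapper around a chat-completion call).
    """
    question = transcript.strip()
    if not question:
        return None  # dictation heard nothing usable; keep listening
    history.append({"role": "user", "content": question})
    # Always keep the system prompt, plus only the latest messages (assumed limit).
    request = history[:1] + history[1:][-max_messages:]
    reply = generate(request)
    history.append({"role": "assistant", "content": reply})
    return reply
```

The returned reply is the text that would then be handed to the text-to-speech step.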

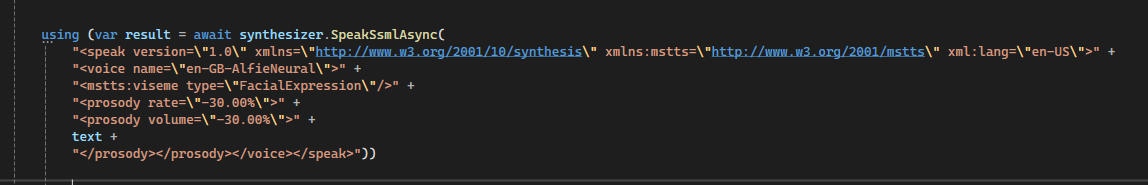

To make the king talk, I implemented a text-to-speech system with live lip-syncing, using the Azure Speech SDK installed through NuGet. The code in the image above initialises the synthesiser and sets all the voice options, including which voice is used. Most importantly, it also tells the synthesiser to report visemes, which indicate the mouth shape the king should make at each moment of speech. This output is used to apply the correct blend shape to the king's mouth in real time.
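In the C# Speech SDK, viseme output arrives through the synthesiser's `VisemeReceived` event. Its arguments include a `VisemeId` (0 to 21) and an `AudioOffset` measured in 100-nanosecond ticks. The sketch below uses Python and no SDK to show how such events could become a timeline that is queried with the current audio playback time. The viseme-to-blend-shape table and the shape names are hypothetical.

```python
import bisect

TICKS_PER_SECOND = 10_000_000  # viseme audio offsets are reported in 100-ns ticks

# Hypothetical mapping from a few viseme IDs to blend shape names on the king's
# mesh (0 = silence, 21 = p/b/m); a real rig would cover every ID.
VISEME_TO_BLENDSHAPE = {0: "mouth_closed", 1: "mouth_ah", 2: "mouth_aa", 21: "mouth_pbm"}


class VisemeTimeline:
    """Stores viseme events and answers "which mouth shape now?"."""

    def __init__(self):
        self.offsets = []  # event start times in seconds, ascending
        self.ids = []

    def add(self, audio_offset_ticks, viseme_id):
        # Events arrive in playback order, so appending keeps the lists sorted.
        self.offsets.append(audio_offset_ticks / TICKS_PER_SECOND)
        self.ids.append(viseme_id)

    def blendshape_at(self, playback_seconds):
        """Return the blend shape for the latest viseme that has already started."""
        i = bisect.bisect_right(self.offsets, playback_seconds) - 1
        if i < 0:
            return "mouth_closed"
        return VISEME_TO_BLENDSHAPE.get(self.ids[i], "mouth_closed")
```

Each frame, the returned name would drive the weight of the matching blend shape. Easing between shapes instead of switching instantly would give smoother mouth movement.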

A demonstration of all of this can be found in the video on the top right of the web page.

Lastly, I integrated FMOD into the Unity project. To achieve spatial audio in VR, I installed the Meta XR Audio plugin. The FMOD setup also uses parameters to crossfade between different music tracks depending on whether the king is talking.
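On the code side, the crossfade can come down to moving a single parameter between 0 and 1. The sketch below eases that value at a fixed rate so the music does not jump when the king starts or stops speaking. The parameter name `KingTalking`, the fade time and both functions are hypothetical. With the FMOD Studio API, such a value would typically be passed to an event instance with `setParameterByName`, and the volume curves would usually be authored in FMOD Studio. `equal_power_gains` only illustrates what an equal-power curve computes.

```python
import math


def step_crossfade(current, king_talking, dt, fade_seconds=1.5):
    """Move a 0..1 crossfade value toward its target at a fixed rate.

    The result would be sent each frame to a hypothetical FMOD event
    parameter such as "KingTalking".
    """
    target = 1.0 if king_talking else 0.0
    max_step = dt / fade_seconds  # full fade takes fade_seconds
    if current < target:
        return min(current + max_step, target)
    return max(current - max_step, target)


def equal_power_gains(x):
    """Gains for (idle music, talking music) at crossfade position x in [0, 1]."""
    return math.cos(x * math.pi / 2), math.sin(x * math.pi / 2)
```

An equal-power curve keeps the combined loudness roughly constant halfway through the fade, whereas a linear fade tends to dip noticeably.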

| Field | Value |
| --- | --- |
| Status | Released |
| Author | Matteo Marelli |
